[X.com] by @deedydas

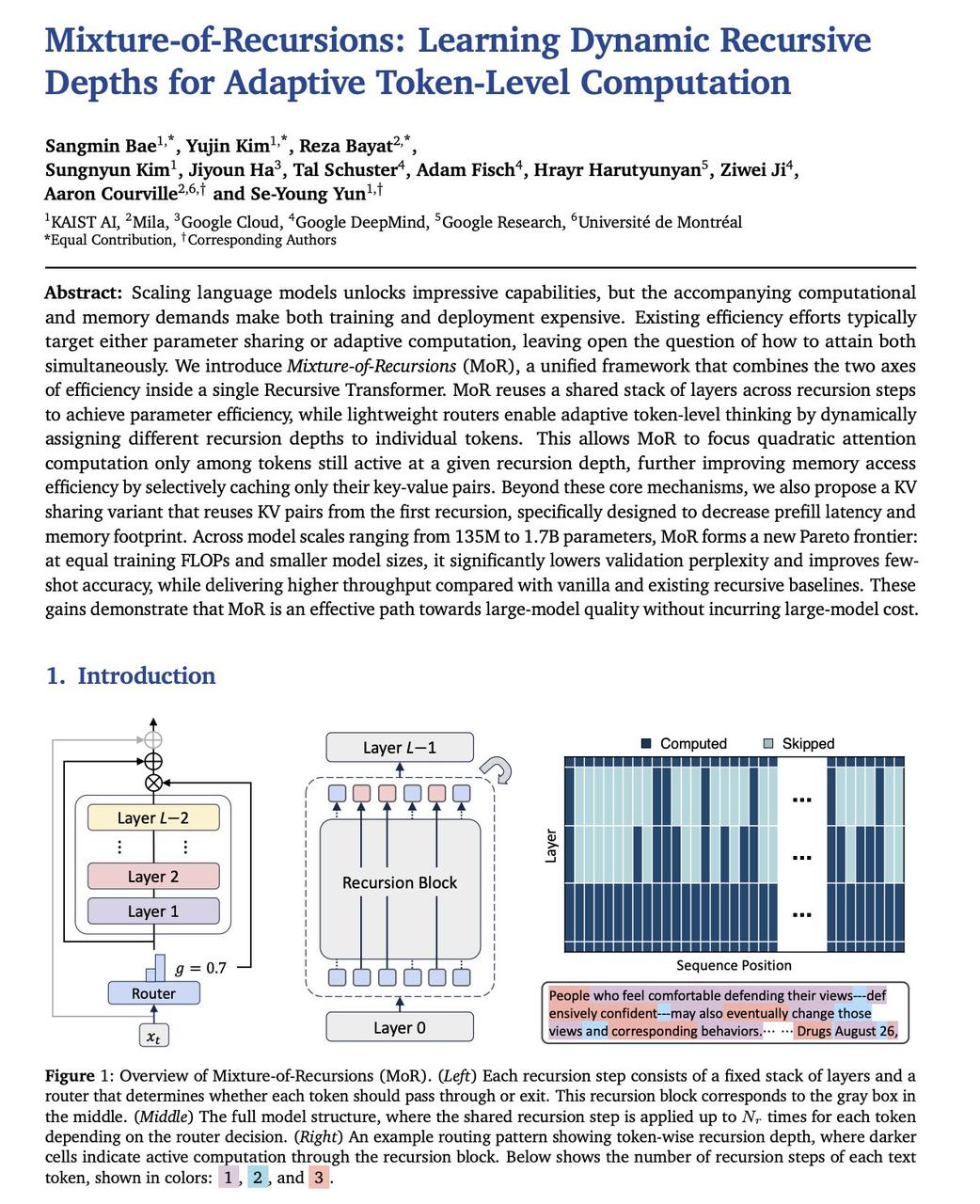

Google DeepMind and collaborators just dropped a new LLM architecture called Mixture-of-Recursions (MoR).

It gets up to 2x faster inference, fewer training FLOPs, and ~50% less KV cache memory. A really interesting read.

It has the potential to be a Transformer killer. https://t.co/LdrKmSy6tR